DSC180 A08 Imitating Behavior to Model the Brain

UC San Diego, Halıcıoğlu Data Science Institute

walker

This is the project repository website for DSC 180 - A08.

Background

Living animals are one of the primary sources of data for the development of artificial neural systems. In general, an animal performs all of its behaviors and actions under the guidance of a single neural network and its underlying algorithms. One of the end goals for artificial intelligence systems is to emulate these complex neural networks virtually, so that our creations act in a way that replicates how a real animal behaves and learns.

Embodied control in neuroscience holds the potential to deepen our understanding of how the real brain works. If a sufficient model of the brain can be created in conjunction with the behaviors that a biorealistically simulated agent generates, then we can quickly generate new hypotheses about real-world mappings between the brain and behavior. To achieve this, researchers in this field are working to decode all the parts of animals’ neural networks through inferential and replicatory techniques. One of the leading methods is to build models that learn to behave like a real animal, mainly deep learning models trained through imitation learning or reinforcement learning.

Prior research at this intersection of neuroscience and machine learning has explored methods to solve tasks given input data from a sensory channel, such as vision or sound. The approach taken in “Deep Neuroethology of a Virtual Rodent”, and in this replication work, focuses on understanding the underlying mechanisms of “embodied control” (Merel et al., 2019): how animals use a “combination of their body, sensory input, and behavior” to accomplish tasks, with a particular focus on motor control. The implications of this study include furthering our understanding of the mechanisms underlying animal behavior in both motor neuroscience and artificial intelligence.

Walker Agent Visualization

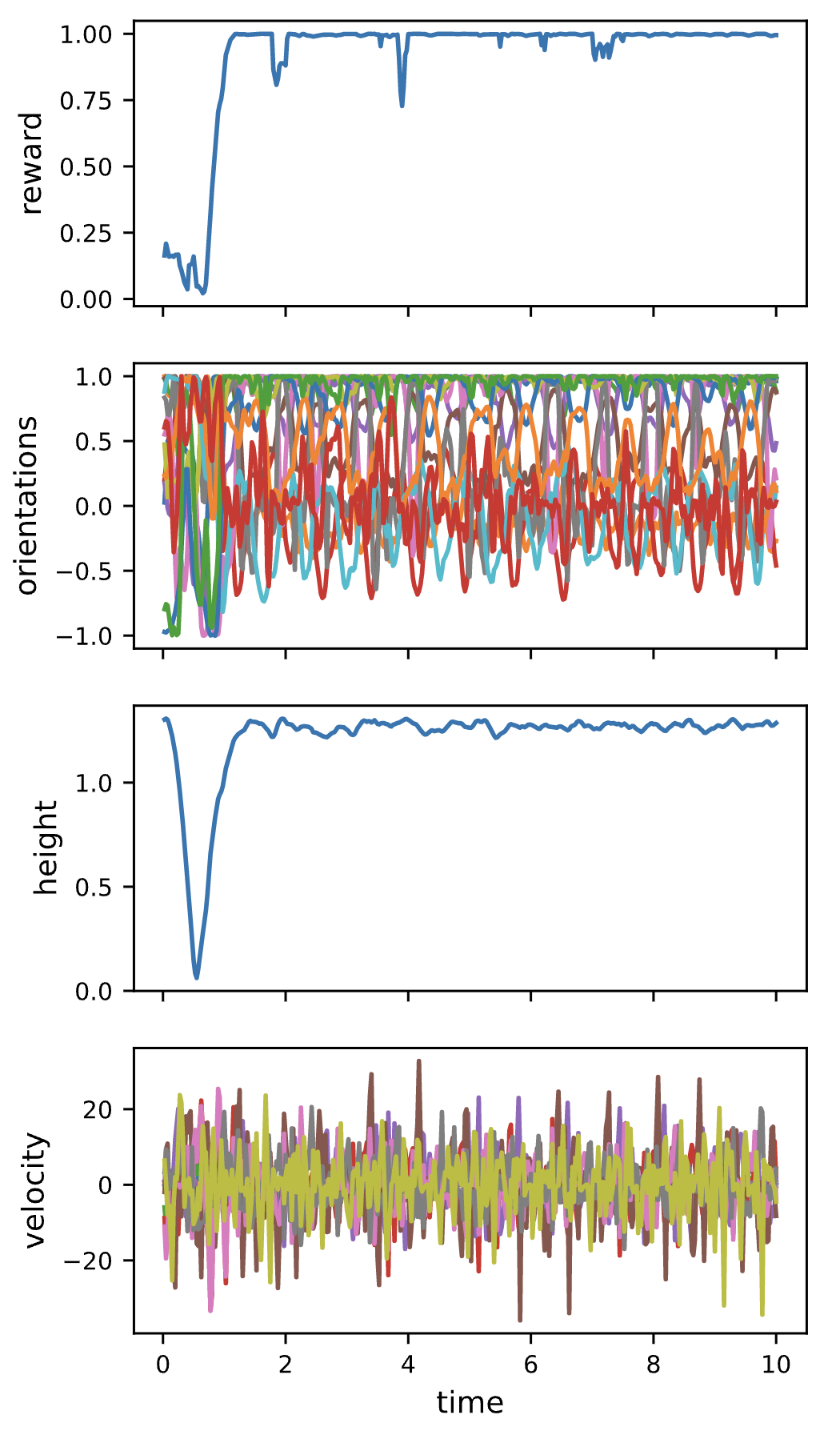

Online Model

The online model appears to be less stable than the Behavioral Cloning (BC) model.

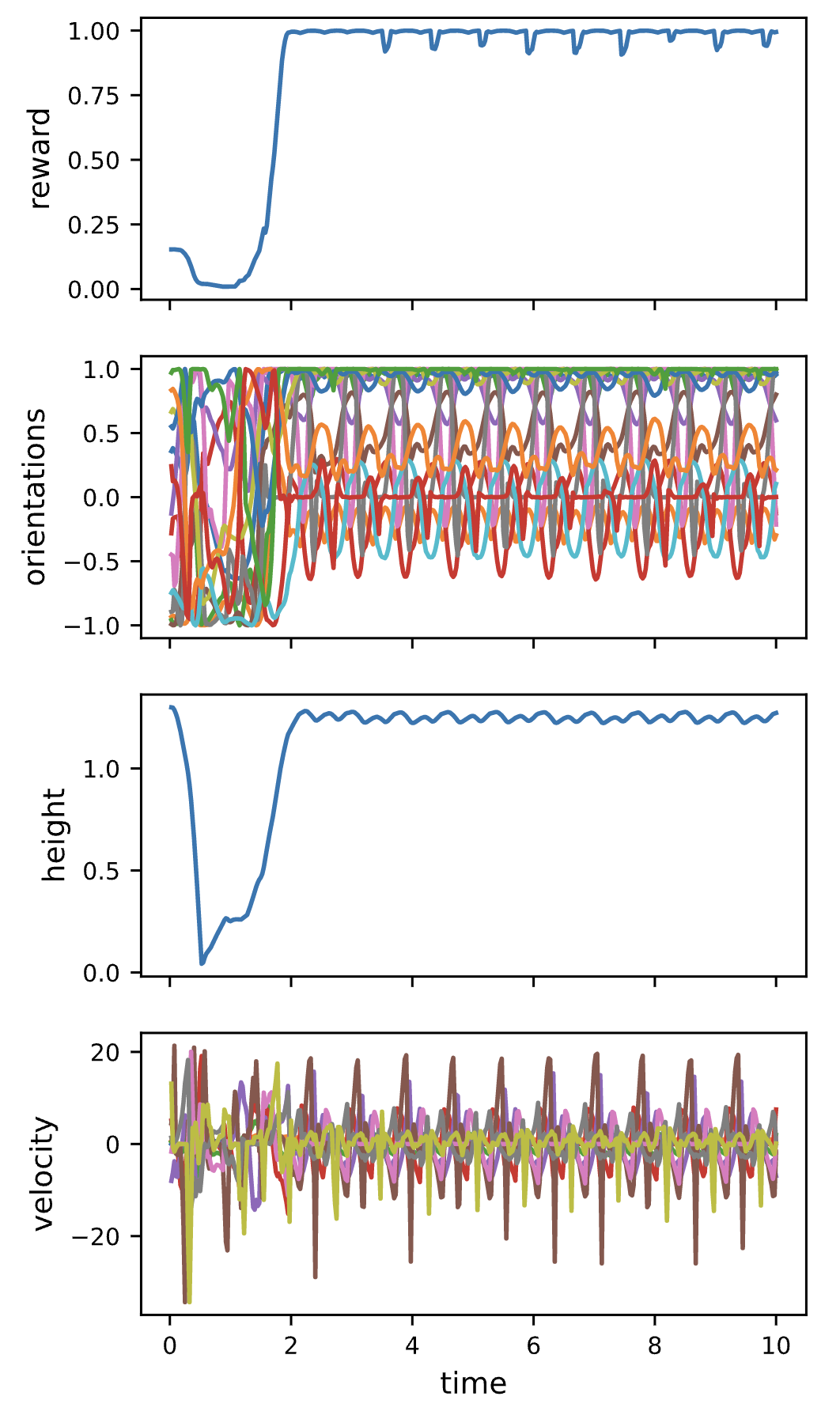

Behavioral Cloning (BC) Model
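Behavioral cloning treats imitation as supervised learning: a policy is fit to map observed states to the actions an expert took in those states. The page does not describe the project's BC architecture or training setup, so the following is only a deliberately tiny sketch under stated assumptions: a linear policy fit by gradient descent on mean-squared error, trained on synthetic "expert" data. The function `behavioral_cloning` and all of the data are hypothetical; BC policies for simulated bodies such as the walker are typically neural networks rather than linear maps.

```python
import numpy as np


def behavioral_cloning(states, actions, lr=0.1, epochs=500):
    """Hypothetical helper: fit a linear policy a ≈ s @ W + b to expert
    (state, action) pairs by full-batch gradient descent on squared error."""
    n, state_dim = states.shape
    W = np.zeros((state_dim, actions.shape[1]))
    b = np.zeros(actions.shape[1])
    for _ in range(epochs):
        err = states @ W + b - actions      # policy actions minus expert actions
        W -= lr * states.T @ err / n        # gradient of (1/2n) * sum of squared errors
        b -= lr * err.mean(axis=0)          # same loss, gradient w.r.t. the bias
    return W, b


# Synthetic "expert" demonstrations (an assumption): a noisy linear controller
rng = np.random.default_rng(0)
true_W = rng.standard_normal((4, 2))
true_b = np.array([0.5, -0.5])
states = rng.standard_normal((1000, 4))
actions = states @ true_W + true_b + 0.01 * rng.standard_normal((1000, 2))

W, b = behavioral_cloning(states, actions)
cloned_actions = states[:5] @ W + b         # query the cloned policy on some states
```

Because the expert here is itself linear, the cloned weights should land very close to `true_W` and `true_b`; with a real agent, the same loop would instead be run over recorded trajectories with a more expressive model.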

Side-by-side Comparison

t-SNE Clustering of Kinematics

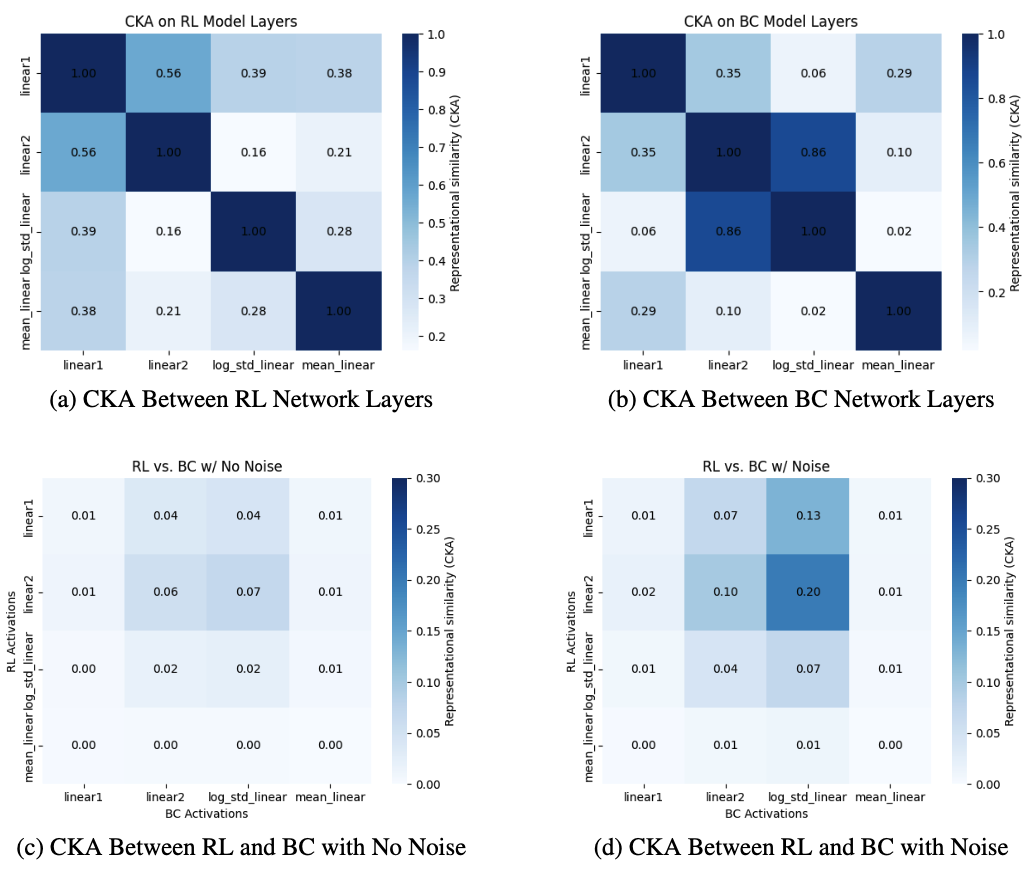
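The page does not state which t-SNE implementation or settings produced this figure. As a rough illustration of what t-SNE does with kinematic data, here is a minimal exact t-SNE in NumPy, assuming each row of `X` is one frame of kinematic features (for example, joint angles). The helper names, the perplexity, the exaggeration and momentum schedule, and the step-size heuristic are all illustrative assumptions, not the project's settings. Production implementations such as scikit-learn's `sklearn.manifold.TSNE` add adaptive gains and a Barnes-Hut approximation that this sketch omits.

```python
import numpy as np


def _row_affinities(dist_sq, perplexity, tol=1e-5, max_iter=50):
    """Binary-search the Gaussian precision (beta) for one point so the
    entropy of its neighbor distribution equals log(perplexity)."""
    target = np.log(perplexity)
    beta, lo, hi = 1.0, 0.0, np.inf
    shifted = dist_sq - dist_sq.min()  # shift for numerical stability
    for _ in range(max_iter):
        p = np.exp(-shifted * beta)
        p /= p.sum()
        entropy = -np.sum(p * np.log(p + 1e-12))
        if abs(entropy - target) < tol:
            break
        if entropy > target:  # too flat: sharpen the kernel
            lo = beta
            beta = beta * 2.0 if np.isinf(hi) else (beta + hi) / 2.0
        else:                 # too peaked: widen the kernel
            hi = beta
            beta = (beta + lo) / 2.0
    return p


def tsne(X, n_components=2, perplexity=10.0, n_iter=500,
         early_exaggeration=4.0, learning_rate=None, seed=0):
    """Exact O(n^2) t-SNE with plain momentum gradient descent."""
    n = X.shape[0]
    if learning_rate is None:
        # conservative step-size heuristic (an assumption, not tuned)
        learning_rate = n / (8.0 * early_exaggeration)
    sq = np.sum(X ** 2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    P = np.zeros((n, n))
    for i in range(n):
        others = np.arange(n) != i
        P[i, others] = _row_affinities(D[i, others], perplexity)
    P = (P + P.T) / (2.0 * n)  # symmetric joint probabilities summing to 1
    rng = np.random.default_rng(seed)
    Y = 1e-4 * rng.standard_normal((n, n_components))
    velocity = np.zeros_like(Y)
    for it in range(n_iter):
        exaggeration = early_exaggeration if it < 100 else 1.0
        momentum = 0.5 if it < 100 else 0.8
        sq_y = np.sum(Y ** 2, axis=1)
        # Student-t (one degree of freedom) kernel in the embedding
        num = 1.0 / (1.0 + sq_y[:, None] + sq_y[None, :] - 2.0 * Y @ Y.T)
        np.fill_diagonal(num, 0.0)
        Q = np.maximum(num / num.sum(), 1e-12)
        # gradient of KL(P || Q): 4 * sum_j (p_ij - q_ij) * num_ij * (y_i - y_j)
        W = (exaggeration * P - Q) * num
        grad = 4.0 * (np.diag(W.sum(axis=1)) - W) @ Y
        velocity = momentum * velocity - learning_rate * grad
        Y = Y + velocity
    return Y


# Hypothetical kinematics: two "behaviors", 30 frames each of 5-D features
rng = np.random.default_rng(0)
X = np.vstack([rng.standard_normal((30, 5)),
               rng.standard_normal((30, 5)) + 10.0])
labels = np.array([0] * 30 + [1] * 30)
Y = tsne(X, perplexity=10.0)
```

Frames from the same synthetic behavior should end up grouped together in `Y`, which is the property a kinematics t-SNE plot relies on; note that distances between clusters in a t-SNE map are not generally meaningful.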

CKA Analysis
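CKA (centered kernel alignment) scores how similar two sets of activations are when both are recorded on the same inputs or time steps, even if they have different numbers of units. The page does not say which CKA variant was used; the sketch below assumes linear CKA, defined with column-centered matrices as CKA(X, Y) = ‖YᵀX‖²_F / (‖XᵀX‖_F ‖YᵀY‖_F). The function `linear_cka` and the activation arrays are hypothetical stand-ins for, say, two layers of a policy network.

```python
import numpy as np


def linear_cka(X, Y):
    """Linear CKA between activation matrices X (n x p) and Y (n x q)
    whose rows correspond to the same n inputs or time steps."""
    X = X - X.mean(axis=0)  # center each unit's activity
    Y = Y - Y.mean(axis=0)
    cross = np.linalg.norm(Y.T @ X, ord="fro") ** 2
    norm_x = np.linalg.norm(X.T @ X, ord="fro")
    norm_y = np.linalg.norm(Y.T @ Y, ord="fro")
    return cross / (norm_x * norm_y)


# Hypothetical activations: 200 time steps from two layers or models
rng = np.random.default_rng(0)
acts_a = rng.standard_normal((200, 64))
rotation, _ = np.linalg.qr(rng.standard_normal((64, 64)))  # random orthogonal matrix
acts_rotated = 3.0 * acts_a @ rotation      # same representation, rotated and scaled
acts_unrelated = rng.standard_normal((200, 32))

same = linear_cka(acts_a, acts_a)               # 1 up to floating-point error
invariant = linear_cka(acts_a, acts_rotated)    # also 1: invariant to rotation and isotropic scaling
unrelated = linear_cka(acts_a, acts_unrelated)  # well below 1
```

The rotation-invariance check is the main reason CKA suits comparisons across networks or animals: two representations that differ only by an orthogonal change of basis score as identical.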